Abstrarct

| Scene completion | Object completion |

|---|---|

|  |

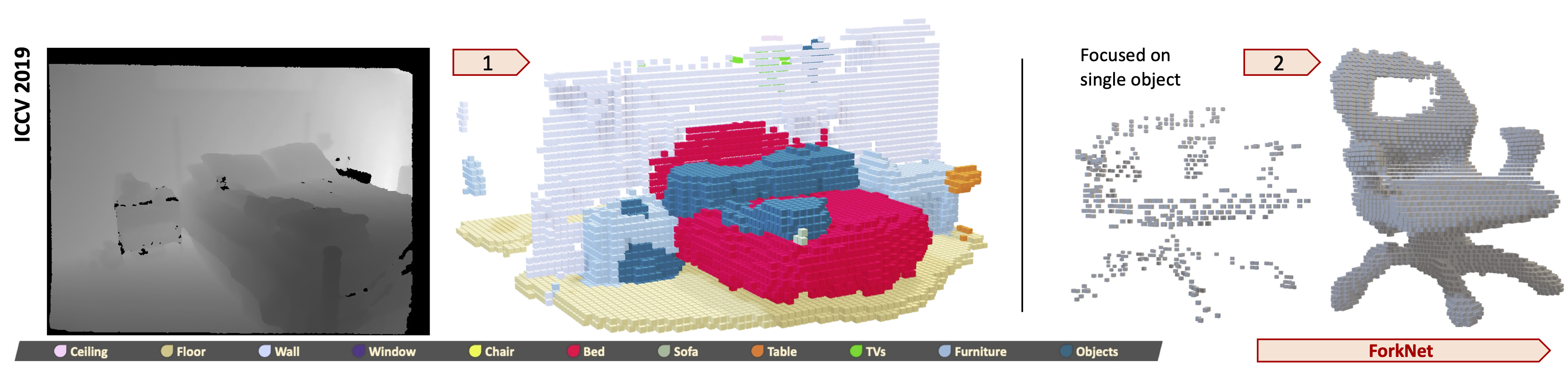

We propose a novel model for 3D semantic completion from a single depth image, based on a single encoder and three separate generators used to reconstruct different geometric and semantic representations of the original and completed scene, all sharing the same latent space. To transfer information between the geometric and semantic branches of the network, we introduce paths between them concatenating features at corresponding network layers. Motivated by the limited amount of training samples from real scenes, an interesting attribute of our architecture is the capacity to supplement the existing dataset by generating a new training dataset with high quality, realistic scenes that even includes occlusion and real noise. We build the new dataset by sampling the features directly from latent space which generates a pair of partial volumetric surface and completed volumetric semantic surface. Moreover, we utilize multiple discriminators to increase the accuracy and realism of the reconstructions. We demonstrate the benefits of our approach on standard benchmarks for the two most common completion tasks: semantic 3D scene completion and 3D object completion.

Methodology

Architecture

The proposed volumetric network architecture for semantic completion relies on a shared latent space encoded from SDF volume x reconstructed from the input depth image. The two decoding paths are trained to generate, respectively, incomplete surface geometry (xˆ), completed geometric volume (g) and completed semantic volumes (s).

The proposed volumetric network architecture for semantic completion relies on a shared latent space encoded from SDF volume x reconstructed from the input depth image. The two decoding paths are trained to generate, respectively, incomplete surface geometry (xˆ), completed geometric volume (g) and completed semantic volumes (s).

(a-b) Downsam- pling and (c) upsampling convolutional layers in our architecture. Note that the two parameters (s, d) in all the functions are the stride and dilation while the kernel size is set to 3.

(a-b) Downsam- pling and (c) upsampling convolutional layers in our architecture. Note that the two parameters (s, d) in all the functions are the stride and dilation while the kernel size is set to 3.

Learning

Graphical models of the 4 data flows (and the associated loss terms) used during training and derived from our architecture figure.

Graphical models of the 4 data flows (and the associated loss terms) used during training and derived from our architecture figure.

Solving practical problems

Solving lack of paired training data

| Synthetic paired data | |

|---|---|

| The synthetically generated SDF volume and the corresponding completed semantic scene. |

Correcting wrong annotations

| Annotation correction | |

|---|---|

| ForkNet corrects the wrong ground truth from SUNCG dataset on the TVs. |

Qualitatives

Semantic scene completion

Object completion

Cite

If you find this work useful in your research, please cite:

@inproceedings{wang2019forknet,

title={ForkNet: Multi-branch Volumetric Semantic Completion from a Single Depth Image},

author={Wang, Yida and Tan, David Joseph and Navab, Nassir and Tombari, Federico},

booktitle={Proceedings of the IEEE International Conference on Computer Vision},

pages={8608--8617},

year={2019}

}