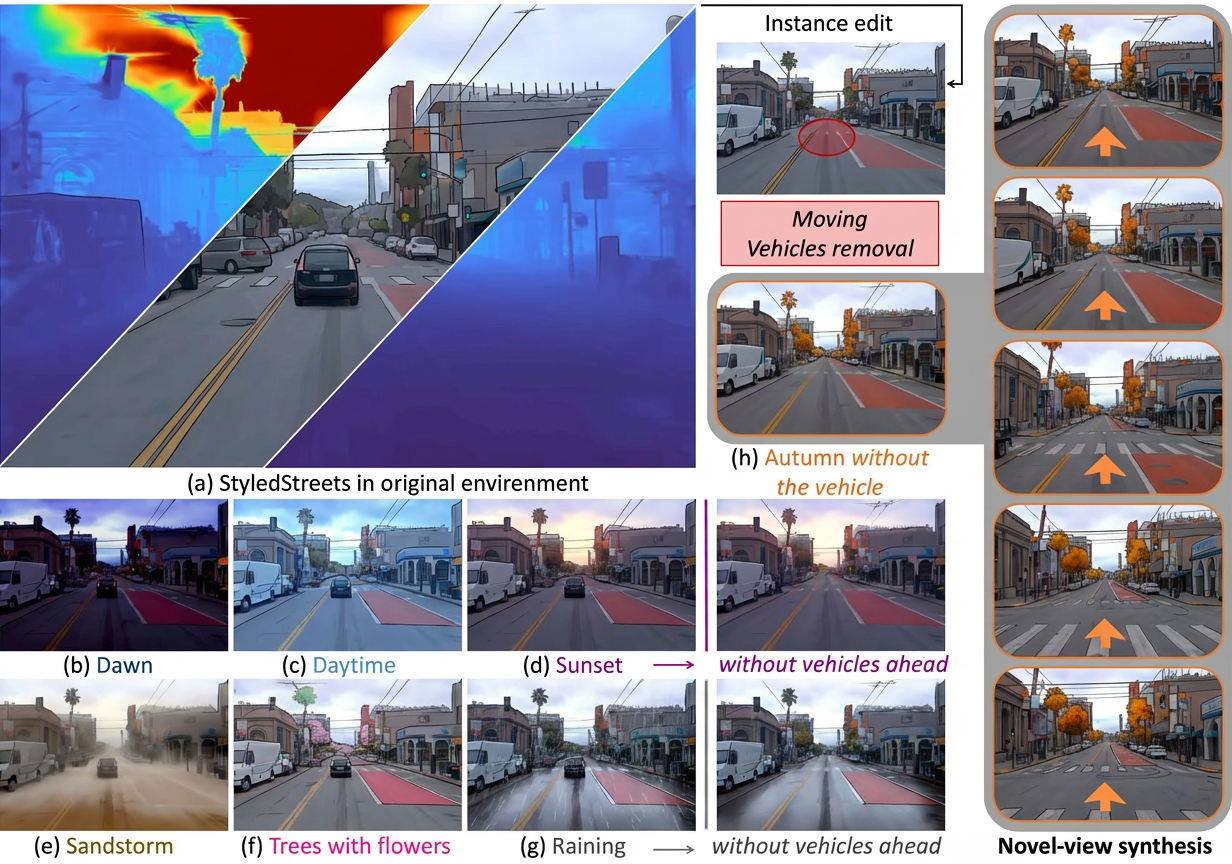

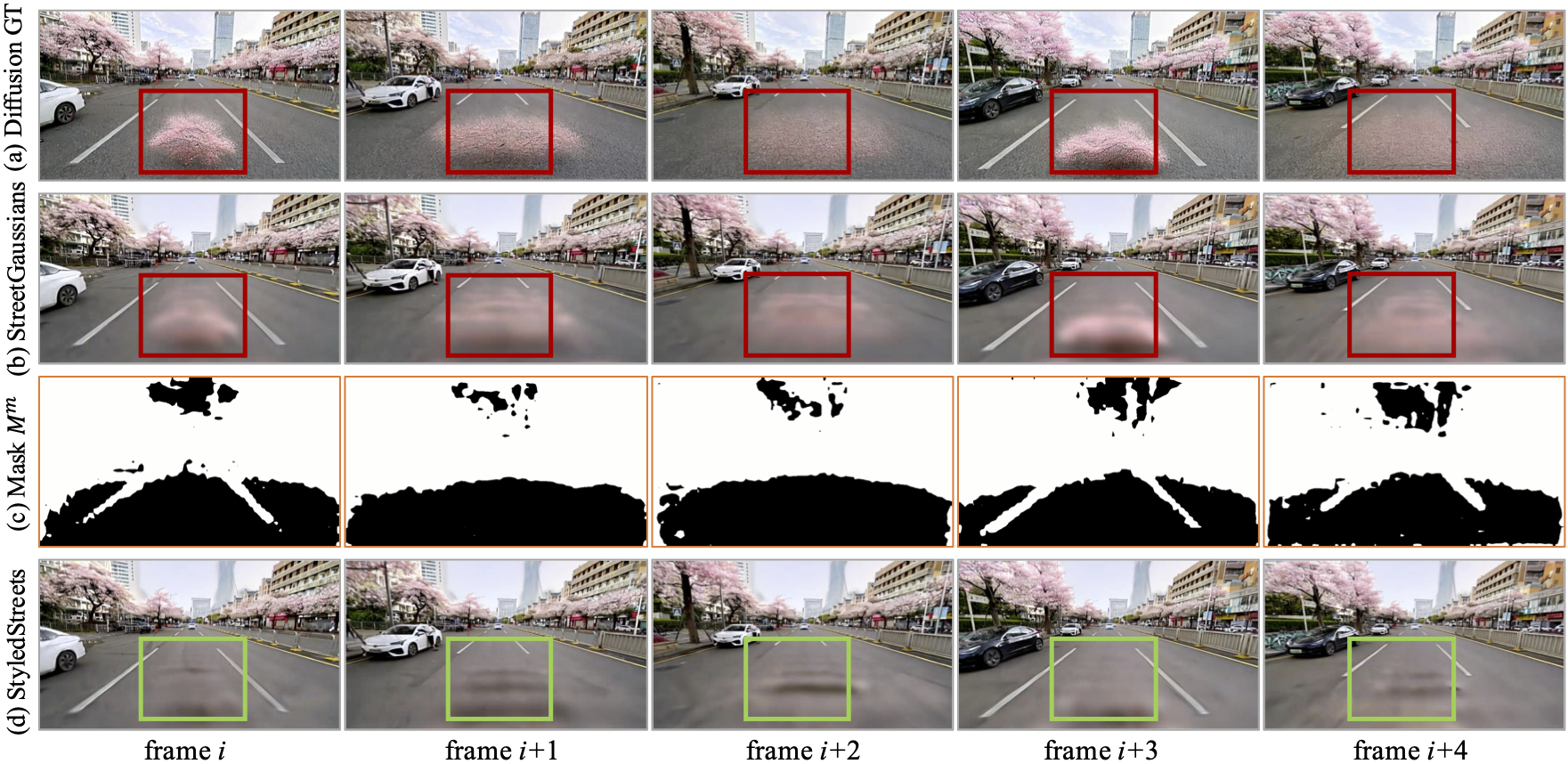

Urban scene reconstruction requires modeling both static infrastructure and dynamic elements while maintaining consistency across diverse environmental conditions. We present StyledStreets, a multi-style street synthesis framework for instruction-driven scene editing that preserves spatial and temporal coherence. Building on 3D Gaussian Splatting, we improve street scene modeling through pose-aware optimization and multi-view training, enabling photorealistic environmental style transfer across seasons, weather conditions, and multi-camera configurations. Our approach introduces three key innovations: (1) a hybrid geometry-appearance embedding architecture that disentangles persistent scene structure from transient stylistic attributes; (2) an uncertainty-aware rendering pipeline that mitigates supervision noise from diffusion-based priors; and (3) a unified parametric model that enforces geometric consistency through regularized gradient updates.
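To make the idea of uncertainty-aware supervision concrete, here is a minimal sketch of a generic uncertainty-weighted L1 loss. It is not the formulation used in StyledStreets, which this page does not spell out. It only illustrates one common way to down-weight noisy targets, such as diffusion-edited images: each pixel carries a predicted log-scale, and the loss is the Laplace negative log-likelihood up to a constant. The function name `uncertainty_weighted_l1` and its parameters are hypothetical.

```python
import math


def uncertainty_weighted_l1(pred, target, log_scale):
    """Mean Laplace negative log-likelihood, up to an additive constant.

    Illustrative only; not the StyledStreets loss.
    pred, target: per-pixel intensities (flat sequences of floats).
    log_scale:    per-pixel predicted log of the Laplace scale b.
                  A larger value means the model trusts that target less.
    """
    if not (len(pred) == len(target) == len(log_scale)):
        raise ValueError("inputs must have the same length")
    if len(pred) == 0:
        raise ValueError("inputs must be non-empty")

    total = 0.0
    for p, t, s in zip(pred, target, log_scale):
        residual = abs(p - t)
        # |r| / b shrinks the residual where uncertainty is high;
        # the + log(b) term penalizes claiming high uncertainty everywhere.
        total += residual * math.exp(-s) + s
    return total / len(pred)


if __name__ == "__main__":
    pred = [1.0, 2.0]
    noisy_target = [1.0, 4.0]  # second pixel disagrees with the rendering
    print(uncertainty_weighted_l1(pred, noisy_target, [0.0, 0.0]))
    print(uncertainty_weighted_l1(pred, noisy_target, [0.0, math.log(2.0)]))
```

With zero log-scale everywhere, the loss reduces to a plain mean absolute error. Raising the log-scale on the inconsistent pixel lowers the total, which is the mechanism that lets a model discount unreliable supervision.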

Methodology

Mitigating Diffusion Ambiguity

Qualitative Results

Depth

Novel Trajectories

Multi-cameras

Cite

If you find this work useful in your research, please cite:

@misc{chen2025styledstreetsmultistylestreetsimulator,
  title={StyledStreets: Multi-style Street Simulator with Spatial and Temporal Consistency},
  author={Yuyin Chen and Yida Wang and Xueyang Zhang and Kun Zhan and Peng Jia and Yifei Zhan and Xianpeng Lang},
  year={2025},
  eprint={2503.21104},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2503.21104},
}